Using Yarn v2 workspace, Docker Compose and Visual Studio Code to facilitate development of JavaScript monorepos

This article describes the tooling setup for a local development environment I recently created that brings together the following tools and technologies:

- Docker Compose.

- The following features of Yarn v2:

  - Workspaces.

  - Plug’n’Play (PnP).

- The following features of Visual Studio Code:

  - The Remote Containers extension.

  - IntelliSense provided by the TypeScript language server.

  - The Prettier formatter extension.

- TypeScript (and by extension, JavaScript, of which TypeScript is a strict superset).

- Webpack.

- Babel.

- ESLint.

- Prettier.

- Jest.

The article only provides a high-level overview because I have provided extensive commentary inside the codebase on GitHub. The intended audience is experienced developers who have used most of the above tools and technologies in isolation and are looking to wrangle them together. Even though this article only discusses TypeScript/JavaScript, I’ve created another repo showing how to set up a Docker Compose-based development and debugging environment for Python using VS Code’s Remote Containers extension.

Because I’ll most likely find ways to improve this setup when I use it for my own development work and because the integration between the various tools in this setup is constantly improving, I expect to revise this article multiple times in the future.

Motivation

This setup facilitates the development of JavaScript monorepos, which promote a high degree of coherence and consistency between various parts of a codebase in terms of tooling, dependencies and business logic.

For example, in a library like Babel, coherence between the core processing pipeline (@babel/parser, @babel/generator), input/output adapters (such as @babel/cli) and plugins (such as @babel/preset-env) ensures that the entire ecosystem moves in lockstep when new JavaScript language features are released.

In particular, isomorphic full-stack web applications (single-page front-end React applications that use server-side rendering and client-side hydration) that follow the 12-factor app philosophy greatly benefit from this setup.

The monorepo structure ensures that the front- and back-ends both use the same version of React to avoid hydration failure due to version mismatch.

The ability to develop inside Docker containers as easily as on the host machine lets the local development environment closely resemble the production environment, achieving dev/prod parity. Because the JavaScript dependencies and system tools needed by the app are captured in the Docker image and Docker Compose configuration, no implicit dependencies “leak in” from the surrounding environment. As an added bonus, this complete capture of all environmental dependencies keeps the development environment consistent across current developers and simplifies the onboarding of new ones. Instead of running many configuration commands on their local machines (where each command can produce different results or errors due to differences between individual machines), new developers can check out the codebase, run a few Docker commands that have predictable results and start developing right away in a uniform, controlled environment of Docker containers.

Demo application

To show how this setup works at a practical level, a demo JavaScript full-stack web application is included. This demo application is an interactive in-browser explorer of the Star Wars API, which contains the data about all the Star Wars films, characters, spaceships and so on. If you want to quickly try the application out, here is the online version created by the GraphQL team.

In keeping with the purpose of this article, the demo application’s functionality is deliberately kept simple to avoid distracting from the tooling.

All the application code is contained in only three files (front-end/src/App.tsx, front-end/src/index.tsx and back-end/src/index.ts).

The rest are tooling configurations.

As a believer in a minimalist approach to tooling configurations, I have made every attempt to only include the absolute minimum amount of configuration necessary for the functionality of these tools.

The front-end’s run time dependencies are:

- react and react-dom are the UI framework.

- graphiql provides the GraphQL interactive explorer. It has two peer dependencies that are included but not used directly: prop-types and graphql.

The back-end’s run time dependencies are:

- express is the web server.

- express-graphql is the Express middleware for GraphQL.

- swapi-graphql-schema contains the GraphQL schema and resolvers.

- graphql is a peer dependency of swapi-graphql-schema and express-graphql.

- cors takes care of CORS issues in local development.

Codebase structure

The code base is structured as a monorepo containing two Yarn workspaces:

- The front-end workspace (packages/front-end) is a React application that contains a single GraphiQL component, which provides the interactive user interface.

- The back-end workspace (packages/back-end) is an Express server that serves up a GraphQL API using the express-graphql middleware. This GraphQL API is a wrapper around the original RESTful Star Wars API. I forked the GraphQL schema and resolvers and improved their tooling to make them usable inside this application. In keeping with the 12-factor philosophy, the back-end remains agnostic of its surroundings by listening on a single port (8000) and at the root path (/) instead of /api or /graphql. Note that although express-graphql is capable of serving up its own GraphiQL editor (by setting the graphiql option to true), thereby removing the need for a separate front-end, I chose to create a separate front-end for demonstration purposes.

Yarn v2

Version 2 of the Yarn package manager, currently in release candidate status, brings genuine innovations to the JavaScript package system, many of which are covered in its release announcement. Two of those innovations are noteworthy.

The first innovation is workspaces, which were first introduced in version 1 but only become truly useful for Docker in version 2. By allowing developers to work transparently with monorepos, workspaces provide a high degree of coherence to a project because multiple related parts can exist in the same monorepo and cross-reference each other, allowing changes in one part to be instantly applied to the others.

Prior to Yarn v2, it was not possible to fully Dockerize a workspace-based monorepo because workspaces were implemented with symlinks, which do not work in a Docker image.

However, Yarn v2 workspaces do not use symlinks.

Instead, they use Plug’n’Play (PnP), the second innovation I want to mention.

Roughly speaking, PnP modifies NodeJS’s module resolution strategy so that when Node requires a module, instead of making multiple file system calls to look for that module, Node is directed by Yarn to look in a central Yarn cache and can obtain that module with a single call.

This vastly improves the performance during installation and runtime module loading.

However, this feature does interfere with two kinds of development tools: those whose dependency loading relies on the file layout on disk instead of proper declaration of dependencies in their package.json (e.g. fork-ts-checker-webpack-plugin) and those that implement their own module resolution strategy (e.g. eslint).

When I use the term Yarn v2 workspace in this article, I mean workspaces combined with PnP.

Note that although you will see a few node_modules directories appear after running yarn build or yarn develop in a workspace, these directories do not contain library code.

Rather, they contain the local cache of Terser, Webpack’s default code minifier.

With PnP, no module code is loaded from node_modules directories.
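To make the contrast between the two resolution strategies concrete, here is a toy model. It is only a sketch under loud assumptions: the names nodeModulesCandidates, PnpTable and resolveWithPnp are hypothetical, the package location in the table is made up, and the real .pnp.js file generated by Yarn is considerably more involved.

```typescript
// Toy model only; this is not Yarn's or Node's actual implementation.

// Classic resolution: try a node_modules directory at every level between
// the importing file's directory and the file system root.
function nodeModulesCandidates(fromDir: string, request: string): string[] {
  const parts = fromDir.split("/").filter((part) => part.length > 0);
  const candidates: string[] = [];
  for (let depth = parts.length; depth >= 0; depth--) {
    const prefix = "/" + parts.slice(0, depth).join("/");
    const base = prefix === "/" ? "" : prefix;
    candidates.push(`${base}/node_modules/${request}`);
  }
  return candidates;
}

// PnP-style resolution: for each workspace, a table records exactly where
// every declared dependency lives, so resolving is a single lookup.
type PnpTable = Record<string, Record<string, string>>;

function resolveWithPnp(table: PnpTable, workspace: string, request: string): string {
  const dependencies = table[workspace] || {};
  const location = dependencies[request];
  if (location === undefined) {
    // An undeclared dependency fails loudly instead of being found by accident.
    throw new Error(`${workspace} does not declare a dependency on ${request}`);
  }
  return location;
}

// Hypothetical table contents; the cache path is invented for illustration.
const examplePnpTable: PnpTable = {
  "front-end": { react: "/yarn/cache/react.zip/node_modules/react/" },
};
```

Calling nodeModulesCandidates("/srv/app/packages/front-end/src", "react") yields one candidate path per directory level, each of which may cost a file system call, whereas resolveWithPnp(examplePnpTable, "front-end", "react") answers from the table directly. The thrown error for an undeclared package also hints at why tools that rely on the on-disk layout rather than declared dependencies run into trouble.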

This setup also includes the @yarnpkg/plugin-workspace-tools.

The plugin allows execution of a Yarn task across multiple workspaces. For example, yarn workspaces foreach build will run the yarn build task for each workspace.

It is not absolutely essential for the functioning of the setup but is nevertheless added to demonstrate how to include Yarn plugins.

Docker image

As stated in the motivation section, the Docker image was created with the core principle that it should:

- Capture within the image as much of the npm dependencies, Yarn settings and system configuration as possible that are necessary to develop and run the code using Yarn v2 inside the container, and

- Read and write as little as possible from and to the codebase’s directory.

In fact, the only information the Docker build process reads from the codebase is the root yarn.lock and the package.json files inside the root and child workspaces.

The Docker image achieves this by taking full advantage of Yarn v2’s high configurability, providing two configuration files:

- .yarnrc.yml (Yarn v2’s main configuration file) is “lifted” out of the codebase volume mount at /srv/app [1] into its parent directory /srv (as seen from the container’s side). Because .yarnrc.yml is only used by Yarn v2, not Yarn v1 or npm, lifting it into the parent directory still allows Yarn v2 on the container side to find its configuration while not interfering with the operation of npm or Yarn v1 on the host side. This .yarnrc.yml contains important settings to further isolate Yarn v2 from the codebase directory:

  - The cacheFolder, globalFolder, virtualFolder and pnpUnpluggedFolder settings tell Yarn to store the npm dependencies in a /yarn directory outside the codebase directory instead of in the default .yarn directory inside the codebase directory.

  - The plugins setting tells Yarn where to find its plugins, which have already been downloaded and stored in /yarn by a previous build step. As mentioned above, the only plugin I use is @yarnpkg/plugin-workspace-tools.

- A .yarnrc file (different from .yarnrc.yml) contains the yarn-path setting, which tells the system-wide Yarn (either v1 or v2; in this case v1, because that is the version shipped in the base NodeJS Docker image) where to find the Yarn executable for the current codebase. Because there’s only one codebase inside the Docker container and the container runs under the non-root user node per best practice, I’ve chosen to put this .yarnrc file in the node user’s home directory.

The only Yarn-related files that are not captured in the Docker image are yarn.lock and .pnp.js because their locations are not configurable.

The presence of these two files in the codebase directory means that it’s not possible to use both Yarn v2 on the container side and Yarn v1 on the host side, although there is currently a ticket to fix that.

However, it should be possible to use Yarn v2 on the container side and npm on the host side.

Last but not least, just like all npm dependencies in this codebase, the version of Yarn and its plugins as well as the tags of all base Docker images are pinned down exactly to ensure development environment stability and reproducibility.

Docker Compose services

The docker-compose.yml file defines three services.

One is base.

It saves me from typing out a long docker build command with a long list of build arguments and tags the resulting Docker image with the same name that will be used by the other two services, front-end and back-end.

Note that both the front-end and back-end services share the same volume mounts.

It’s not surprising that they share the volume mount for the source code.

The more remarkable thing is that they can also share the same volume mount for the Yarn cache.

Because of PnP, Yarn v2 is able to centralize all dependencies across all workspaces into a single cache directory regardless of whether the dependencies are shared or not shared among the workspaces.

This was not possible for workspaces in v1 because Yarn v1 only “hoists” shared dependencies out of child workspaces’ node_modules directories into the node_modules directory in the root workspace.

If a Yarn v1 monorepo consists of n child workspaces, the same setup would require n + 1 anonymous Docker volumes (one for each child workspace’s node_modules plus one for the root’s node_modules) whereas a v2 monorepo only needs a single volume.

Another disadvantage of Yarn v1 is that lifting node_modules directories from within the codebase directory into a Docker container using volume mounts is unreliable due to a long-running bug in Docker.
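The difference in volume count can be written down as a small function. This is purely an illustration of the reasoning above; the function name dependencyVolumesNeeded is hypothetical and nothing like it exists in the codebase.

```typescript
// Counts the Docker volumes needed to keep installed dependencies out of the
// bind-mounted codebase directory, following the reasoning in the text.
function dependencyVolumesNeeded(yarnMajorVersion: 1 | 2, childWorkspaces: number): number {
  if (yarnMajorVersion === 1) {
    // One node_modules per child workspace, plus the root's node_modules.
    return childWorkspaces + 1;
  }
  // With PnP, every workspace reads from the same central cache.
  return 1;
}
```

For this demo’s two workspaces (front-end and back-end), the function gives three volumes under Yarn v1 and one under Yarn v2, and the gap widens as workspaces are added.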

Here are a few useful Docker Compose commands in this codebase:

- To start the front-end and back-end containers in development mode, run docker-compose up -d.

- To tail the interleaved logs of the front-end and back-end containers, run docker-compose logs --tail=all -f.

- To shut down the web application, run docker-compose down.

- To delete the anonymous Docker volumes associated with this Docker Compose config (useful for troubleshooting), run:

docker volume rm $(docker volume ls -f "name=yarn-v2-workspace-docker-vs-code" --format "{{.Name}}")

VS Code’s Remote Containers Extension

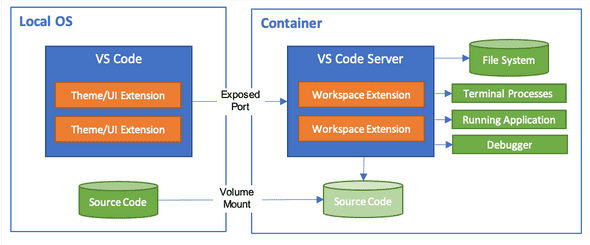

The major enabling factor for my workflow is VS Code’s Remote Containers extension. The extension allows you to have the same development experience inside a container as you would have on your host machine. As explained by this diagram from the extension’s documentation, it works by installing a server (and other VS Code extensions if you so wish) into the Docker container while keeping the editor’s user interface fully on the host side. Application source code is shared between the host and the container through a volume mount:

This approach has two advantages:

- Running the VS Code server and extensions inside the container guarantees that the code is executed in development the same way as it would be in production. Furthermore, running Visual Studio Code extensions for important development tasks such as linting, type checking and debugging inside the container ensures that these tasks perform exactly the same way on different developers’ machines.

- Keeping the editor’s graphical interface on the host side helps maintain fast and responsive performance, avoiding the lag commonly associated with a remote desktop solution such as X11 forwarding.

The importance of being able to develop from inside containers and the demonstrated usefulness of VS Code’s Remote Containers extension have recently prompted Facebook to retire their own unified development environment for the Atom editor and join forces with Microsoft to further develop the extension. There is currently an item on the extension’s roadmap for supporting Kubernetes, which will be very exciting if released.

The configuration for a remote development container is specified in a devcontainer.json file in the project directory.

However, because I have two containers, I need separate files: vs-code-container-back-end/.devcontainer.json for the back-end and vs-code-container-front-end/.devcontainer.json for the front-end (note the leading dots in the file names).

There are a few important settings in these files:

- dockerComposeFile: an array of one or more docker-compose.yml files, including overrides. For example, front-end.docker-compose.yml overrides certain settings specific to the front-end service in the base docker-compose.yml.

- service: which service VS Code should attach itself to for remote development.

- extensions: a list of any VS Code extensions that should be installed into the container. Although some VS Code extensions run on the host side (the Vim plugin for example), most run in the remote container and thus have to be specified here. In this codebase, the Prettier extension is installed into the development containers.
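As a rough sketch, the three settings above could be modeled as a typed object like the one below for the front-end container. The DevContainerSettings interface and the variable names are hypothetical, and the relative paths are assumptions about where the Compose files sit relative to the .devcontainer.json file; check the actual files in the codebase for the real values.

```typescript
// Shape of the three settings discussed above (a subset of what the file allows).
interface DevContainerSettings {
  dockerComposeFile: string[];
  service: string;
  extensions: string[];
}

// Assumed values for the front-end container. The relative paths are guesses
// for illustration; only the service name and file names come from the text.
const frontEndDevContainer: DevContainerSettings = {
  // The base file first, then the front-end override.
  dockerComposeFile: ["../docker-compose.yml", "front-end.docker-compose.yml"],
  // The Docker Compose service that VS Code attaches to.
  service: "front-end",
  // Marketplace ID of the Prettier extension, installed inside the container.
  extensions: ["esbenp.prettier-vscode"],
};

// Serialized, this is roughly what would go into .devcontainer.json.
const frontEndDevContainerJson = JSON.stringify(frontEndDevContainer, null, 2);
```

The back-end file would look the same apart from the service name and its own override file.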

I suggest that you exclude the files mentioned in this section from version control in a real-world project because they mostly contain settings that are more personal to each developer, such as what VS Code extensions to install or what Linux shell to use.

This is in contrast to Dockerfile and docker-compose.yml, which need to be consistent across all developers because they are important to the correct functioning of the codebase.

TypeScript

TypeScript is chosen as the programming language to show how it can work seamlessly with Yarn v2 workspace and VS Code with some configuration.

If you choose to use JavaScript, things only get easier and you can ignore the discussion in this section.

Putting aside editor integration for a moment, using TypeScript, as usual, requires installing the typescript package and type definitions for libraries that don’t already include them, such as @types/react and @types/react-dom.

Because Babel already supports workspaces, I decided to use it to transpile TypeScript to JavaScript through @babel/preset-typescript for both Webpack and Jest.

However, because Babel only transpiles code without doing type checking, it’s necessary to add a few more ingredients:

- In development mode: add the fork-ts-checker-webpack-plugin to the Webpack config, which watches TypeScript files and performs type checking on a separate thread. This plugin does require extra settings in .yarnrc.yml because it doesn’t declare its peer dependencies properly.

- In production mode: run tsc --noEmit, which invokes the TypeScript compiler to perform type checking without emitting any code, before starting a build with Webpack. The fork-ts-checker-webpack-plugin is not used in a production build because if type checking fails, I want the build task to also fail synchronously.

Note that you don’t really need the @yarnpkg/plugin-typescript plugin.

If installed, it will helpfully install the corresponding type definitions from DefinitelyTyped for you when you add a package with Yarn.

However, I find this help a bit annoying because when I install a tooling dependency like Prettier, the plugin will also install the unnecessary type definition @types/prettier.

You can safely ignore this plugin if you manually install type definitions for your runtime dependencies.

Thankfully, VS Code’s IntelliSense will remind you in case you forget.

Babel

Babel works very well with workspaces and no special configuration is needed. Here are the Babel-related dependencies:

- @babel/core is the main Babel engine.

- @babel/preset-env transpiles ES modules to CommonJS for Jest. This is not necessary on the back-end.

- @babel/preset-typescript transpiles TypeScript to JavaScript.

- @babel/preset-react transpiles JSX in the front-end.

Webpack

Webpack works quite well with PnP. The only changes I need to make in the Webpack config compared to a regular config are:

- In the Webpack config, add the pnp-webpack-plugin, following the plugin’s instructions.

- In the Webpack config, reference the babel-loader as require.resolve("babel-loader") instead of just "babel-loader".

- Invoke Webpack with webpack-cli instead of webpack due to a bug in Webpack.
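The first two changes can be sketched as follows. To keep the example self-contained, the plugin and the resolver are passed in as arguments rather than imported; in a real webpack.config.js they would be require("pnp-webpack-plugin"), module and require.resolve. The function name makePnpAwareConfig, the structural types, the file extensions and the loader test pattern are all assumptions made for illustration, not taken from the codebase.

```typescript
// Structural stand-ins so the sketch type-checks without Webpack installed.
interface PnpPluginLike {
  moduleLoader(currentModule: unknown): unknown;
}

interface WebpackConfigSketch {
  resolve: { extensions: string[]; plugins: unknown[] };
  resolveLoader: { plugins: unknown[] };
  module: { rules: Array<{ test: RegExp; loader: string }> };
}

function makePnpAwareConfig(
  pnpPlugin: PnpPluginLike,
  currentModule: unknown,
  resolveRequest: (request: string) => string
): WebpackConfigSketch {
  return {
    resolve: {
      extensions: [".ts", ".tsx", ".js"],
      // Lets Webpack resolve application imports through PnP.
      plugins: [pnpPlugin],
    },
    resolveLoader: {
      // Lets Webpack resolve loaders through PnP as well.
      plugins: [pnpPlugin.moduleLoader(currentModule)],
    },
    module: {
      rules: [
        {
          test: /\.tsx?$/,
          // A resolved path instead of the bare "babel-loader" string.
          loader: resolveRequest("babel-loader"),
        },
      ],
    },
  };
}
```

Everything else in the config (entry, output, other loaders and plugins) stays as it would be in a regular, non-PnP setup.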

The front-end is served in development mode by webpack-dev-server and in production mode by the serve package.

Because the back-end is already a server, I don’t use webpack-dev-server in the back-end’s Webpack development mode config.

Rather, I use:

- Webpack CLI’s --watch mode to recompile when the source is modified.

- nodemon-webpack-plugin to run nodemon on the build output and restart the server automatically when the output changes.

Other dependencies necessary for Webpack’s functionality are:

- css-loader and style-loader are Webpack loaders for CSS.

- html-webpack-plugin generates the index HTML file.

Prettier

Prettier works well both standalone on the command line and integrated as a VS Code Plugin.

Because I use the default Prettier settings, no configuration file is needed.

However, a .prettierignore file is added to exclude certain directories, such as node_modules and coverage (Jest’s code coverage output).

The only dependency necessary is the package prettier itself.

ESLint

ESLint takes a bit of effort to get working with workspaces.

Running ESLint on the command line works fine except when I want to use preset configurations.

Some shared configurations work out of the box, such as plugin:react/recommended (the recommended config from eslint-plugin-react).

Other preset configs, such as eslint:recommended or prettier, require manually copying the configs into a local directory and telling ESLint where to find them with a relative path.

Fortunately, because ESLint configs are either JSON files or CommonJS modules that export a plain JavaScript object, adapting them didn’t take too much effort.

However, I do look forward to the day I can just use these configs out of the box the way they are intended to be used.

In the meantime, I’ll monitor the relevant GitHub issues.

This codebase uses both Prettier and ESLint to demonstrate how the linting responsibility should be shared among these tools.

Prettier checks for strictly code styling-related issues, such as trailing commas and quotes.

ESLint checks for more substantive issues, such as for loops whose counter variables move in the wrong direction and cause infinite loops.
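Here is a small example of the kind of problem that falls on ESLint’s side of that division. I am assuming the check in question is ESLint’s for-direction rule; the two functions are hypothetical and not part of the demo application.

```typescript
// The for-direction rule would report this loop: the condition needs `index`
// to grow, but the update shrinks it, so for any positive `count` the loop
// never terminates. Defined for illustration only and never called.
function sumFirstBroken(values: number[], count: number): number {
  let total = 0;
  for (let index = 0; index < count; index--) {
    total += values[index];
  }
  return total;
}

// The corrected version: the counter moves toward the stop condition.
function sumFirst(values: number[], count: number): number {
  let total = 0;
  for (let index = 0; index < count; index++) {
    total += values[index];
  }
  return total;
}
```

Prettier would happily format both functions identically, which is the point: it has no opinion on whether the loop is correct, only on how it looks.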

The dependencies required to run ESLint are:

- eslint is the executable.

- eslint-plugin-react contains rules dealing with React and JSX.

- @typescript-eslint/{eslint-plugin,parser} allows ESLint to work with TypeScript code.

- All the configurations contained in the eslint-configs directory. The comments in them will tell you where they came from originally.

VS Code integration with TypeScript IntelliSense and Prettier

Getting IntelliSense (provided by the TypeScript language service) and Prettier to work in VS Code wasn’t too hard with PnPify, a tool designed to smooth over incompatibility between PnP and various tools in the JavaScript ecosystem.

Here are the instructions.

typescript and prettier have to be installed as dev dependencies in the top-level workspace.

This is necessary because PnPify will scan the top-level package.json to figure out what tools it needs to generate configurations for.

Currently PnPify can generate configurations for TypeScript, Prettier and ESLint.

These configurations, which essentially tell VS Code where to find executables that have been modified by PnPify for TypeScript and Prettier, are stored in the .vscode/pnpify directory in the top-level workspace.

These modified executables are wrappers around the real executables and perform some initial setup to make them play nicely with PnP.

The repo already contains the result of these steps so you don’t have to run them.

To run Prettier on a file, just choose “Format Document” from VS Code’s Command Palette.

I couldn’t get the VS Code ESLint plugin to work but you can always run it on the command line.

An outstanding issue with TypeScript IntelliSense is that hitting F12 (“Go to definition”) on an identifier in a TypeScript file will not take me to its TypeScript definition. This is caused by Yarn’s storage of npm packages as zip files (see GitHub issue) and a fix for it is on VS Code’s road map.

Jest

Because I use Babel to transpile both TypeScript application code and test code, Jest works out of the box without any special setup.

To demonstrate that testing with Jest works, the front-end has a single smoke test to ensure that the UI renders without error into a JSDOM environment.

The test uses @testing-library/react to ensure that user-facing text elements in the UI (such as the “Prettify” and “Merge” buttons) are present in the markup when rendered in this mock DOM.

I might add more realistic tests such as visual regression tests with Cypress in the future.

Other installed dependencies that are necessary for testing are:

- jest is the testing framework.

- @testing-library/jest-dom adds Jest assertions on DOM elements, such as .toBeInTheDocument().

- @types/testing-library__{jest-dom,react} are the TypeScript definitions for the two @testing-library packages, needed because tests are written in TypeScript.

Other libraries

- Storybook v6 (currently in beta) fully supports Yarn v2 workspaces. Here is the migration guide from v5 to v6. The migration was straightforward for me personally.

Notes on generating the initial yarn.lock

Suppose that you are trying to apply this setup to a new codebase on a machine that has Yarn v1 installed system-wide, as is the norm. Because the lock file generated and used by Yarn v2 (which lives on the container side) is not compatible with that generated and used by Yarn v1 (which lives on the host side), the Docker build will fail because:

- The cp yarn.lock [...] command in the first stage will fail because yarn.lock does not exist.

- The yarn --immutable command at the very end will also fail because yarn.lock needs to be modified, i.e. created (yarn install is deliberately run with the --immutable flag to warn you if the lock file is out of date).

However, because the build fails due to a missing v2 lock file, you have no way of creating a working environment in which to invoke Yarn v2 to generate the v2 lock file. This is a chicken and egg problem. The solution is:

- In Dockerfile, replace the cp yarn.lock ../manifests/ step in the first build stage with cp yarn.lock ../manifests/ 2>/dev/null || :. This allows cp to not fail in the absence of yarn.lock.

- In Dockerfile, delete yarn --immutable at the end.

- Run docker-compose build base.

- Run docker-compose run --rm -v $(pwd):/srv/app:delegated base yarn. This will generate the v2 yarn.lock in the project directory (along with a .pnp.js file that is .gitignored).

- Remember to revert the above modifications to Dockerfile.

[1] “Why the odd location /srv/app,” you might ask? According to the Filesystem Hierarchy Standard, “/srv contains site-specific data which is served by this system,” which is a good fit for a web app. I learned this from an article by John Lees-Miller.