Writing plugins for remark and gatsby-transformer-remark (part 1)

Note: This is part one of a three-part tutorial. Here are part two and part three.

Motivation

After a year-long hiatus due to my work schedule, I wanted to get back into writing again. The first thing I did was to change the technology stack of my website to save money and improve its performance. Previously, the site was built with Grav, a PHP-based flat-file CMS, and served from a VPS hosted by InMotion Hosting, which costs some amount of money each month. Now I’ve switched to building my website with Gatsby, a React-based static site generator, and serving it from Netlify’s CDN.

One problem I encountered with my new blog was how to insert code snippets from GitHub repositories I may or may not own (henceforth referred to as foreign repos) into my blog posts. In my mind, the ideal solution should be able to recognize foreign GitHub links in a Markdown file, fetch and insert them into the file as code blocks with the appropriately highlighted syntax. Because I also do not always want to insert the entire foreign file, I want to be able to specify that only a subset of the file should be inserted.

There are a few approaches I could think of:

- Manually copy the code from those foreign repos and paste it into Markdown or save it in a local file and use some tool to inline it into Markdown at build time. This approach is problematic because getting the raw content of each GitHub file through the GitHub web UI can be tedious over time. Moreover, manually maintaining the copied code or file can be error-prone.

- Use git submodules to put each foreign repo from which I want a file inside the blog’s repo. This feels too heavy-handed to me because:

  - git submodules can be tricky to manage even in the best of times and especially so in these use cases:

    - I want to use files from multiple different commits of the same foreign repo. Do I have to keep multiple submodules of that foreign repo?

    - I want to refer to different foreign repos among different branches of the blog repo. (I follow the git flow model and compose different blog posts on different feature branches.) Switching from branch A to branch B will cause the foreign repos used by branch A to show up as “untracked files” in branch B.

  - It wastes space and download time in local development if I just want a single file from a foreign repo.

- Author regular GitHub URLs in my Markdown but use some tool to fetch and insert the code for those foreign GitHub files into my Markdown.

I started looking around and couldn’t find any tool that does exactly what I want.

The ones that came close are:

- @mosch/gatsby-source-github can only fetch from a single foreign repo. If I want to use files from multiple repos across different posts (which I definitely will), I’ll have to create multiple instances of that plugin, which will add a lot of bloat to my Gatsby config over time.

- gatsby-source-github only fetches details about repos rather than the files inside them.

Due to this lack of off-the-shelf solutions, I decided to write my own tool.

Specifications

Now that I’ve decided to write my own tool, I have to make a few design decisions.

I decided to not add new language constructs to Markdown to indicate the locations of GitHub links to be processed because I don’t know how to do so without creating conflicts with the existing Markdown language (which is actually not unambiguously-specified) and because I don’t know how to modify remark’s parser.

Rather, I prefer to sandwich the intended GitHub links between special embedding markers that my tool can then easily detect and process.

The embedding marker should be a word or phrase that is unlikely to occur in normal written text, and it should be user-configurable because one person’s special marker may occur regularly in another person’s writing.

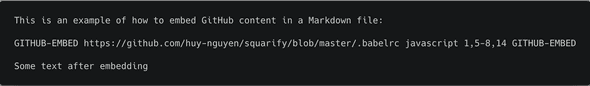

For example, if the embedding marker is GITHUB-EMBED, the tool should be able to convert the following Markdown input

into a Markdown code block with the appropriate language setting (

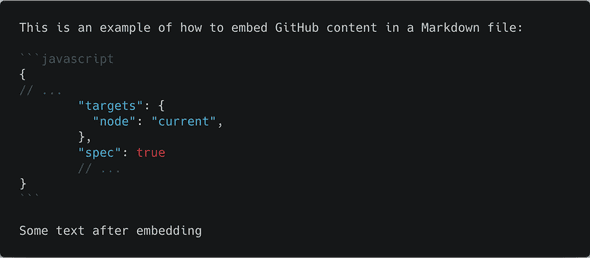

into a Markdown code block with the appropriate language setting (javascript) and appropriate subset of lines (lines 1, lines 5 through 8, and line 14) like this:

This result can then optionally be further processed by a syntax highlighter such as PrismJS.

This result can then optionally be further processed by a syntax highlighter such as PrismJS.

I decided to make the language name and line ranges optional because they are not strictly needed to embed the GitHub content into the Markdown output. However, I did decide to give the language higher priority than the line ranges (i.e. to specify a line range, you need to specify a language but not vice versa) because I consider specifying line ranges to be a more advanced use case than specifying a language.
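To make the "subset of lines" idea concrete, here is a minimal sketch of the selection step on its own. The helper name `selectLines`, the tuple representation of a range and the 1-based, inclusive convention are all assumptions made for illustration; how ranges are actually written inside the embedding markers is not covered here.

```typescript
// Hypothetical helper: a range is [start, end], 1-based and inclusive.
type LineRange = [number, number];

const selectLines = (content: string, ranges: LineRange[]): string => {
  const lines = content.split('\n');
  const selected: string[] = [];
  for (const [start, end] of ranges) {
    // Clamp to the file's bounds so an over-long range does not add `undefined`.
    const from = Math.max(1, start);
    const to = Math.min(lines.length, end);
    for (let lineNumber = from; lineNumber <= to; lineNumber++) {
      selected.push(lines[lineNumber - 1]);
    }
  }
  return selected.join('\n');
};

// A 15-line sample file: "line 1", "line 2", ...
const sampleFile = Array.from({length: 15}, (_, i) => `line ${i + 1}`).join('\n');

// Line 1, lines 5 through 8, and line 14, as in the example above.
console.log(selectLines(sampleFile, [[1, 1], [5, 8], [14, 14]]));
```

Representing a single line as a range whose start equals its end keeps the function to one code path.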

Because Gatsby uses gatsby-transformer-remark (which in turn uses remark) to process Markdown content into HTML, I plan to write an Abstract Syntax Tree (AST) transformer for remark to perform those core tasks.

This transformer will form the core of two thin wrappers:

- A gatsby-transformer-remark plugin, which is used to render some of the GitHub code you see here (so meta!).

- A remark plugin.

Because a remark plugin is more widely usable than a gatsby-transformer-remark plugin, I’ll create the remark plugin first and make the gatsby plugin accept the remark plugin as a dependency.

In addition, testing a remark plugin is also a lot easier than testing a gatsby-transformer-remark plugin because testing the latter requires setting up a full-blown GatsbyJS site.

Plan of action

The core tasks that this plugin has to accomplish are:

1. Recognize areas in Markdown that contain links to GitHub files.

2. Fetch the content of those files from GitHub.

3. Replace those areas with GitHub content.

To keep the articles to a reasonable length, I will split this tutorial into four parts:

In part one of this tutorial, I will show you how to perform task 2 i.e. fetching content from GitHub using their REST API.

In part two, I will show you how to perform task 1 by introducing you to ASTs and experimenting with some basic AST manipulations.

In part three, I will walk you through task 3: combining data fetching and AST manipulation to transform GitHub URLs in Markdown files to their corresponding code blocks. I will also show you how to publish our remark plugin to NPM.

In part four, I will show you how to use the package created in part three to create, test and publish a plugin for gatsby-transformer-remark.

Along the way, I’ll also point out some best practices in developing and testing npm packages.

Technical notes

In this tutorial, I will write production code (i.e. code that performs actual library functionality, in contrast to test code) in TypeScript. It is a great language that I’ve used at work every day for more than two years. Besides exciting the functional programming fanboy in me, TypeScript’s static type checking also helps us avoid errors by enforcing contracts between the package’s constituent functions and helps us better communicate these contracts to potential contributors.

However, I will write test code in regular JavaScript. Test code should be readable to non-TypeScript users because I believe that tests should communicate actual use cases and behaviors (intended cases, corner cases and error paths) of various subparts and the whole of a library. I have found that, for non-TypeScript users, type annotations add unnecessary clutter to test code and hinder their comprehension of it. I have also found that having to satisfy the type system slows me down in writing tests. Writing tests is something we all have to make a conscious effort to do and, as such, should be kept as frictionless as possible.

Which brings me to my choice of test framework: jest.

Having tried in the past to wrangle many different testing tools into working together to test TypeScript code with coverage (such as the crazy combination of mocha + karma + istanbul + webpack), I appreciate jest for making TypeScript testing very straightforward.

jest’s watch mode is a true delight and it makes collecting test coverage from TypeScript effortless.

In my day-to-day work, I try to use new language features in my code as much as possible.

As such, in this tutorial, I will use the fetch API to make AJAX calls.

fetch doesn’t exist in NodeJS but the node-fetch package provides the same API.

I will also use the new async/await syntax a lot because it makes asynchronous code much more readable and less error-prone than writing chains of Promises.

To maintain a consistent code style, I’ll lint my JavaScript code with eslint and TypeScript code with tslint.

Because I’m also a stickler for good Git commit messages, I’ll use commitlint to enforce a widely used convention which will come in handy when I eventually need to write release notes when the package is published to NPM.

After checking out any commit, please run yarn to install all the dependencies before running any command.

To run tests, run npm run test. To run tests in “watch” mode, run npm run test:watch.

To see how the above tools work together, take a look at the state of the repo after the initial setup.

Fetch content from GitHub

GitHub provides a dedicated REST API endpoint to access the content of all repos:

```
GET /repos/:owner/:repo/contents/:path
```

with an optional ref parameter pointing to the commit/branch/tag. A good first step in using any web API is to send out a few small, quick requests to check whether the responses match our expectations. For example, to obtain the content of the file https://github.com/huy-nguyen/squarify/blob/master/.babelrc, issue the following curl command in the terminal:

```shell
# The URL is quoted so that the shell does not try to interpret the "?".
curl 'https://api.github.com/repos/huy-nguyen/squarify/contents/.babelrc?ref=master'
```

whose response looks like:

```json
{
  "name": ".babelrc",
  "path": ".babelrc",
  // ...
  "type": "file",
  "content": "ewogICJwcmVzZXRzIjogWwogICAgWwogICAgICAiZW52IiwgewogICAgICAg\nICJ0YXJnZXRzIjogewogICAgICAgICAgIm5vZGUiOiAiY3VycmVudCIsCiAg\nICAgICAgfSwKICAgICAgICAic3BlYyI6IHRydWUKICAgICAgfQogICAgXQog\nIF0sCiAgInBsdWdpbnMiOiBbCiAgXQp9Cg==\n",
  "encoding": "base64",
  // ...
}
```

Thus, we know that we want to decode the base64-encoded content key from the API response to retrieve a file’s content.
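Before wiring up any network code, the decoding step can be tried in isolation. The sketch below is only an illustration: the helper name `decodeContent` and the `GithubFileResponse` interface are made up for this example, and the sample string is just the first 24 base64 characters of the response above, with a line break inserted to mimic the ones GitHub adds.

```typescript
// Hypothetical shape covering only the two fields used here.
interface GithubFileResponse {
  content: string;
  encoding: string;
}

const decodeContent = ({content, encoding}: GithubFileResponse): string => {
  if (encoding !== 'base64') {
    throw new Error(`Unexpected encoding: ${encoding}`);
  }
  // Node's base64 decoder ignores whitespace such as the "\n" characters
  // that appear inside the encoded string.
  return Buffer.from(content, 'base64').toString('utf8');
};

// First 24 base64 characters of the response above, with a "\n" inserted.
const sampleResponse: GithubFileResponse = {
  content: 'ewogICJwcmVz\nZXRzIjogWwog',
  encoding: 'base64',
};

// JSON.stringify makes the decoded newlines and spaces visible.
console.log(JSON.stringify(decodeContent(sampleResponse)));
```

Checking the encoding field first is a small safeguard: the function fails loudly instead of returning garbage if the API ever reports something other than base64.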

In TypeScript, our fetching function should look like this:

```typescript
/**
 * https://github.com/huy-nguyen/remark-github-plugin/blob/f8eb4781/src/fetchGithubContent.ts
 */
import fetch from 'node-fetch';

export const fetchGithubFile = async () => {
  // tslint:disable-next-line:max-line-length
  const url = 'https://api.github.com/repos/huy-nguyen/squarify/contents/.babelrc?ref=d7074c2c91cfceeb9a91bd995a7f92a1e6702886';
  const response = await fetch(url);
  const {content: base64Content} = await response.json();
  const contentAsString = Buffer.from(base64Content, 'base64').toString('utf8');
  return contentAsString;
};
```

While developing libraries, it’s important to add unit tests early and often to ensure that the code we write actually performs its intended function and to prevent regressions along the way. In that spirit, I wrote the following test to verify that fetching works properly by checking that the first two lines of the fetched content match what we saw in the web UI:

```javascript
// https://github.com/huy-nguyen/remark-github-plugin/blob/f8eb4781/src/__tests__/fetchGithubContent.js
// ...
test('Should succeed with sample request', async () => {
  const actual = await fetchGithubFile();
  expect(actual.startsWith('{\n  "presets": [')).toBe(true);
});
```

After this step, the repo should look like this [1].

Add authentication to fetches

Like many public-facing APIs, GitHub’s API imposes a cap of 60 unauthenticated calls per hour.

To avoid this cap, we need to attach an authentication token to every call, which increases the limit to 5,000 per hour.

Per GitHub’s documentation, the simplest way to do so is using a personal access token.

For this tutorial, a token with the public_repo scope is sufficient.
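To check which limit applies to a given call, GitHub reports the quota in the x-ratelimit-limit, x-ratelimit-remaining and x-ratelimit-reset response headers. The following is a minimal sketch of reading them; the names `HeaderReader`, `RateLimitInfo` and `readRateLimit` are hypothetical, and the stub values are invented so that the example runs without a network call.

```typescript
// Anything with a `get` method works, e.g. the `headers` of a fetch response.
interface HeaderReader {
  get(headerName: string): string | null;
}

interface RateLimitInfo {
  limit: number;
  remaining: number;
  resetsAt: Date;
}

const readRateLimit = (headers: HeaderReader): RateLimitInfo => {
  const toNumber = (headerName: string): number => {
    const raw = headers.get(headerName);
    if (raw === null) {
      throw new Error(`Missing header: ${headerName}`);
    }
    return Number(raw);
  };
  return {
    limit: toNumber('x-ratelimit-limit'),
    remaining: toNumber('x-ratelimit-remaining'),
    // The reset time is reported in seconds since the Unix epoch.
    resetsAt: new Date(toNumber('x-ratelimit-reset') * 1000),
  };
};

// Invented values standing in for the headers of an authenticated response.
const sampleHeaderValues: Record<string, string> = {
  'x-ratelimit-limit': '5000',
  'x-ratelimit-remaining': '4999',
  'x-ratelimit-reset': '1700000000',
};
const stubHeaders: HeaderReader = {
  get: (headerName) => (headerName in sampleHeaderValues ? sampleHeaderValues[headerName] : null),
};

console.log(readRateLimit(stubHeaders));
```

Logging the remaining quota during a test run is a quick way to confirm that the token is actually being sent.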

After obtaining that token, the next step is to provide it to our test code (eventual consumers of this package will have to provide their own token).

The challenge here is to keep the token out of version control [2] while still making it available to the code, both in our local development environment and on a remote continuous integration (CI) server that we will use later.

The standard solution is to make the token available as an environment variable.

For example, if we set the environment variable FOO to be bar then in NodeJS, our JavaScript code can access it by reading the process.env.FOO variable.

To set an environment variable locally, we will use the (very creatively named) dotenv package, a popular solution for setting environment variables in NodeJS code.

When initialized, it converts any someKey=someValue pair in .env files in the project directory into an environment variable someKey whose value is the string someValue.

Because our tests will read the token from the GITHUB_TOKEN environment variable (an arbitrarily chosen name [3]), we should store the token in a .env file (which should be excluded from version control) at the root of our project directory like this:

```shell
# Content of .env file in root project directory:
GITHUB_TOKEN=yourGitHubToken
```

Then we’ll configure jest to run a setup file before running any tests:

```javascript
// https://github.com/huy-nguyen/remark-github-plugin/blob/c49d48dd/jest.config.js
module.exports = {
  // ...
  setupFiles: [
    '<rootDir>/src/setupTests.js',
  ],
};
```

and then initialize dotenv in that setup file by invoking require('dotenv').config():

```javascript
// https://github.com/huy-nguyen/remark-github-plugin/blob/c49d48dd/src/setupTests.js
require('dotenv').config();
```

From now on, any test that needs the token can just read it from the process.env.GITHUB_TOKEN variable.
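One thing to watch for is that process.env.GITHUB_TOKEN is simply undefined when the .env file is missing, which tends to surface later as a confusing authentication failure. A small guard can fail early instead. This is a sketch only: `requireEnv` is a hypothetical helper that is not part of the repo, and the second parameter exists purely so the example can run without touching the real environment.

```typescript
// Hypothetical helper: reads a variable and fails loudly if it is absent.
type Env = Record<string, string | undefined>;

const requireEnv = (variableName: string, env: Env = process.env): string => {
  const value = env[variableName];
  if (value === undefined || value === '') {
    throw new Error(`Environment variable ${variableName} is not set`);
  }
  return value;
};

// In a test file this would be: const token = requireEnv('GITHUB_TOKEN');
// Here an explicit object stands in for process.env:
const exampleToken = requireEnv('GITHUB_TOKEN', {GITHUB_TOKEN: 'yourGitHubToken'});
console.log(exampleToken);
```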

As a minor improvement over the last step, let’s also allow the fetch function to accept the GitHub URL as a parameter instead of hard coding it.

To achieve this, we need to parse the owner, repo, path and ref information from any GitHub URL by using the github-url-parse package.
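To give a feel for what this parsing involves, here is a rough stand-in built on the standard URL class. It is not the github-url-parse implementation; `parseGithubBlobUrl` and `toContentsApiUrl` are hypothetical names, and the returned field names (user, repo, branch, path) are an assumption chosen to mirror what the fetch function destructures.

```typescript
interface ParsedGithubUrl {
  user: string;
  repo: string;
  branch: string;
  path: string;
}

// Hypothetical parser for URLs of the form
// https://github.com/:user/:repo/blob/:ref/:path
const parseGithubBlobUrl = (githubUrl: string): ParsedGithubUrl | null => {
  let url: URL;
  try {
    url = new URL(githubUrl);
  } catch (e) {
    return null; // Not an absolute URL at all, e.g. "example.com"
  }
  if (url.hostname !== 'github.com') {
    return null;
  }
  const [user, repo, kind, branch, ...pathSegments] = url.pathname.split('/').filter(Boolean);
  if (!user || !repo || kind !== 'blob' || !branch || pathSegments.length === 0) {
    return null;
  }
  return {user, repo, branch, path: pathSegments.join('/')};
};

// Turn the parsed pieces into a call to the contents endpoint.
const toContentsApiUrl = ({user, repo, branch, path}: ParsedGithubUrl): string =>
  `https://api.github.com/repos/${user}/${repo}/contents/${path}?ref=${branch}`;

const parsedSample = parseGithubBlobUrl('https://github.com/huy-nguyen/squarify/blob/d7074c2/.babelrc');
if (parsedSample !== null) {
  console.log(toContentsApiUrl(parsedSample));
}
```

A limitation of this sketch is that it treats the segment after blob as the whole ref, so a branch name that itself contains a slash would be split incorrectly.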

The fetch function now accepts both a user-facing GitHub URL and an access token as parameters.

```typescript
// https://github.com/huy-nguyen/remark-github-plugin/blob/64cffd7/src/fetchGithubContent.ts
import parse from 'github-url-parse';
// ...
export const fetchGithubFile = async (githubUrl: string, token: string): Promise<string> => {
  const parseResult = parse(githubUrl);
  if (parseResult !== null) {
    // If the provided URL is a valid GitHub URL:
    const {branch, path, repo, user} = parseResult;
    const fetchUrl = `https://api.github.com/repos/${user}/${repo}/contents/${path}?ref=${branch}`;

    let response: Response;
    try {
      response = await fetch(fetchUrl, {
        headers: {
          Authorization: `token ${token}`,
        },
      });

      // ...

    } catch (e) {
      throw new Error(e);
    }
    // ...
  }
};
```

Our test can now be updated to provide both a token and URL to the fetch function.

We put the token in the outermost scope in the test file because it will be used by other tests in the same file:

```javascript
// https://github.com/huy-nguyen/remark-github-plugin/blob/64cffd79/src/__tests__/fetchGithubContent.js
const token = process.env.GITHUB_TOKEN;

test('Should succeed with valid GitHub URL', async () => {
  const actual = await fetchGithubFile(
    'https://github.com/huy-nguyen/squarify/blob/d7074c2/.babelrc',
    token,
  );
  expect(actual.startsWith('{\n  "presets": [')).toBe(true);
});
```

Handling errors

When dealing with network requests, we also need to handle all the possible ways that a request can fail:

- The URL is not a valid GitHub URL.

- The URL is a syntactically valid GitHub URL but points to a non-existent resource on GitHub.

- The URL is a syntactically valid GitHub URL but points to a GitHub resource that is not a file e.g. a directory.

- The URL is a syntactically valid GitHub URL and points to a valid file but the network request failed e.g. no internet connection.

Because the first failure is already taken care of by the parseResult !== null check above, let’s put in error handling for the latter three cases.

Here’s our complete fetch function:

```typescript
/**
 * File https://github.com/huy-nguyen/remark-github-plugin/blob/64cffd7/src/fetchGithubContent.ts
 */
import parse from 'github-url-parse';
import fetch, {
  Response,
} from 'node-fetch';

export const fetchGithubFile = async (githubUrl: string, token: string): Promise<string> => {
  // Attempt to parse the URL:
  const parseResult = parse(githubUrl);
  if (parseResult !== null) {
    // If the provided URL is a valid GitHub URL...
    const {branch, path, repo, user} = parseResult;
    // The contents endpoint expects the commit/branch/tag in the `ref` parameter:
    const fetchUrl = `https://api.github.com/repos/${user}/${repo}/contents/${path}?ref=${branch}`;

    let response: Response;
    try {
      // ... send out AJAX request:
      response = await fetch(fetchUrl, {
        headers: {
          Authorization: `token ${token}`,
        },
      });

      // If AJAX call succeeds:
      const json = await response.json();
      if (response.ok === true) {
        // If requested URL actually exists on GitHub:
        if (json.type === 'file') {
          // If fetched content is a file instead of directory:
          const contentAsString = Buffer.from(json.content, 'base64').toString('utf8');
          return contentAsString;
        } else {
          throw new Error(githubUrl + ' is not a file');
        }
      } else {
        // Try to create a nice error message if content doesn't exist for given URL:
        const {statusText} = response;

        let errorMessage;
        if (json.message) {
          errorMessage = `${statusText}: ${json.message}`;
        } else {
          errorMessage = statusText;
        }
        throw new Error(errorMessage);
      }

    } catch (e) {
      throw new Error(e);
    }
  } else {
    throw new Error(githubUrl + ' is not an accepted GitHub URL');
  }
};
```
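The branching that happens after the response arrives does not depend on the network at all, so one possible refactoring is to pull it into a pure function that can be exercised with plain objects. This is a sketch under that assumption, not how the repo is organized: `interpretContentsResponse` and `ContentsJson` are hypothetical names, and the error messages simply mirror the ones in the function above.

```typescript
// Hypothetical shape: only the fields that the branching logic looks at.
interface ContentsJson {
  type?: string;
  content?: string;
  message?: string;
}

const interpretContentsResponse = (
  githubUrl: string,
  ok: boolean,
  statusText: string,
  json: ContentsJson,
): string => {
  if (!ok) {
    // Non-successful status, e.g. the path does not exist at the given ref.
    throw new Error(json.message ? `${statusText}: ${json.message}` : statusText);
  }
  if (json.type !== 'file' || typeof json.content !== 'string') {
    // Anything that is not a single file ends up here.
    throw new Error(githubUrl + ' is not a file');
  }
  return Buffer.from(json.content, 'base64').toString('utf8');
};

// 'aGVsbG8=' is the base64 encoding of "hello".
console.log(interpretContentsResponse('someUrl', true, 'OK', {type: 'file', content: 'aGVsbG8='}));
```

With this split, only the thin wrapper that calls fetch needs a real or mocked network in tests.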

It’s very important to test error paths because they are usually the least run portions of a code base, so any bugs lurking in them are the least likely to be discovered through regular usage. Here are the tests for all four ways fetching can fail:

```javascript
// https://github.com/huy-nguyen/remark-github-plugin/blob/64cffd79/src/__tests__/fetchGithubContent.js
const token = process.env.GITHUB_TOKEN;
test('Should throw if called with invalid GitHub URL', async () => {
  expect.assertions(1);
  try {
    await fetchGithubFile('example.com', token);
  } catch (e) {
    expect(e.message).toMatch(/is not an accepted GitHub URL/i);
  }
});

test('Should throw when given URL points to non-existent GitHub content', async () => {
  expect.assertions(1);
  try {
    await fetchGithubFile(
      'https://github.com/huy-nguyen/squarify/blob/d7074c2/someFile',
      token
    );
  } catch (e) {
    expect(e.message).toMatch(/Not Found/i);
  }
});

test('Should throw when given URL points to a GitHub directory', async () => {
  expect.assertions(1);
  try {
    await fetchGithubFile(
      'https://github.com/huy-nguyen/squarify/blob/d7074c2/src',
      token
    );
  } catch (e) {
    expect(e.message).toMatch(/is not a file/i);
  }
});
```

```javascript
// https://github.com/huy-nguyen/remark-github-plugin/blob/64cffd79/src/__tests__/fetchGithubContentFail.js
jest.mock('node-fetch');
const token = process.env.GITHUB_TOKEN;

test('Should handle failed AJAX request to valid GitHub URL', async () => {
  expect.assertions(1);
  fetch.mockRejectedValue(new Error('Network error'));
  try {
    await fetchGithubFile(
      'https://github.com/huy-nguyen/squarify/blob/d7074c2/.babelrc', token
    );
  } catch (e) {
    expect(e.message).toMatch(/Network error/);
  }
});
```

Note that in the last test (for handling no internet connection), we mock out the entire node-fetch module so that it always returns a rejected Promise. The ability to mock out Node modules so easily is one of the best features of jest.

After this last step, the repo should look like this.

This is the end of part one. Click here for part two.

1. Actually, the fetch call in the snippet above is slightly different from the repo snapshot because in the repo, I used the wrong fetch parameter (branch instead of the correct ref). This bug was fixed in a later commit.

2. In fact, Twelve-Factor App says it best: “A litmus test for whether an app has [strict separation of config from code] is whether the codebase could be made open source at any moment, without compromising any credentials.”

3. Because the GitHub token used for testing in this tutorial only needs read permission, I deliberately chose a different variable name from the GH_TOKEN environment variable, which contains a writable GitHub token used by various tools to push changes to GitHub repos. For example, in this project, semantic-release uses GH_TOKEN to write release tags to the GitHub repo, codecov to append test coverage reports to pull requests and greenkeeper to open pull requests to update dependencies. Separating these two GitHub tokens illustrates the principle of least privilege.