Writing plugins for remark and gatsby-transformer-remark (part 3)

Welcome to part three of my three-part tutorial on writing plugins for remark and gatsby-transformer-remark.

In part one, we created well-tested functionality to fetch content from GitHub.

In part two, we learned how to manipulate a Markdown document’s Abstract Syntax Tree (AST) to transform one Markdown language construct into another.

In this part, we will combine those two ingredients to achieve our overall objective: embed the content of GitHub files wherever it is requested in a Markdown document, and then create a plugin that can be used in a GatsbyJS site.

Asynchronous remark transformer

At some point inside our transformer, we’ll have to make a fetch call to GitHub to obtain the content of the GitHub URL.

As a first attempt, building on our final transformer from part two, the new transformer may look something like this:

```typescript
export const transform = ({marker}: IOptions) => (tree: any) => {
  const visitor = (node: any) => {
    const checkResult = checkNode(marker, node);
    if (checkResult.isCandidate === true) {
      // ... set `type`, `children` and `lang` here
      const fileContent = /* fetch GitHub content here */
      // Insert GitHub content into code block:
      node.value = fileContent;
    }
  };

  visit(tree, 'paragraph', visitor);
};
```

However, this will not work. Because a fetch call is asynchronous, it returns a `Promise` rather than the file content itself.

Instead of showing the actual GitHub code, our embedded code block will contain the text `[object Promise]`[1].

The reason is two-fold:

1. Our transformer doesn’t wait for the fetch call to execute successfully before creating the code block.

2. remark doesn’t know that our transformer is asynchronous.
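The following standalone sketch reproduces problem 1 without remark. `fakeFetchGithubFile` and the `CodeNode` shape are hypothetical stand-ins for the real fetch function and mdast node; the point is only that a value assigned without waiting is a `Promise`, and a `Promise` turned into a string reads `[object Promise]`.

```typescript
// Hypothetical stand-in for the GitHub fetch: resolves with the file text.
const fakeFetchGithubFile = (link: string): Promise<string> =>
  Promise.resolve(`// contents of ${link}`);

// Simplified, hypothetical shape of an mdast code node.
interface CodeNode {
  type: 'code';
  lang: string | null;
  value: unknown;
}

const codeNode: CodeNode = {type: 'code', lang: 'ts', value: ''};

// Like the first attempt: assign the fetch result without waiting for it.
codeNode.value = fakeFetchGithubFile('https://github.com/owner/repo/blob/master/file.ts');

// Turning the value into text exposes the Promise, not the file content.
const rendered = String(codeNode.value);
console.log(rendered); // [object Promise]
```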

Let’s fix problem 2 first because it’s easier.

remark’s plugin documentation for transformers says:

> If a promise is returned, the function is asynchronous, and must be resolved (optionally with a Node) or rejected (optionally with an Error)

Accordingly, we can modify the final transformer from part two so that it returns a `Promise`:

```typescript
// https://github.com/huy-nguyen/remark-github-plugin/blob/50b94188/src/transform.ts
export const transform = ({marker}: IOptions) => (tree: any) => new Promise((resolve) => {
  const visitor = (node: any) => {
    // ... modify node as needed
  };

  visit(tree, 'paragraph', visitor);
  resolve();
});
```

Note that we have moved the entire function body inside the `Promise`’s executor (the function `(resolve) => {/* */}` passed into the `Promise` constructor).

remark will now wait for our transformer to finish (i.e., until we call `resolve`) before running other plugins.

After this step, the repo looks like this.
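remark’s actual scheduling lives in the unified pipeline it is built on, but the contract can be pictured with a deliberately simplified model: run the transformers in order and, whenever one returns a `Promise`, wait for it before starting the next. In the sketch below, `runTransformers`, `TreeTransformer` and the string-only tree are hypothetical; this is not unified’s implementation.

```typescript
// Hypothetical, simplified model of a pipeline that runs transformers in
// order and waits for asynchronous ones. This is NOT unified's actual code.
interface Tree {
  children: string[];
}
type TreeTransformer = (tree: Tree) => void | Promise<void>;

const runTransformers = async (tree: Tree, transformers: TreeTransformer[]): Promise<Tree> => {
  for (const transformer of transformers) {
    // Awaiting a non-Promise value just continues, so synchronous transformers
    // still work; a returned Promise is waited on before the next transformer.
    await transformer(tree);
  }
  return tree;
};

// Shaped like our transformer: it returns a Promise and resolves only after
// its (simulated) asynchronous work has modified the tree.
const asyncTransformer: TreeTransformer = (tree) => new Promise<void>((resolve) => {
  Promise.resolve().then(() => {
    tree.children.push('embedded GitHub content');
    resolve();
  });
});

// A later transformer that records how many children it sees.
const seen: number[] = [];
const laterTransformer: TreeTransformer = (tree) => {
  seen.push(tree.children.length);
};

const pipelineResult = runTransformers({children: []}, [asyncTransformer, laterTransformer]);
```

Because `laterTransformer` only runs after the `Promise` from `asyncTransformer` resolves, it sees the embedded content already in place.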

async, but where?

Now let’s tackle problem 1 above by making our transformer wait for the fetches to finish.

But which function should do the waiting: the visitor or the Promise executor?

Because fetching happens inside `visitor`, our first instinct is to make `visitor` wait, something like this:

```typescript
export const transform = ({marker, token}: IOptions) => (tree: any) => new Promise((resolve) => {
  const visitor = async (node: any) => {
    // ...
    // Setting `type`, `children` and `lang`...
    const fileContent = await /* fetch GitHub content */
    node.value = fileContent;
  };

  visit(tree, 'paragraph', visitor);

  resolve();
});
```

However, that doesn’t work. The Markdown result is:

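The reason is that the `visit` function we use to walk the tree calls its callback (`visitor` in this case) synchronously and ignores the `Promise` that an `async` visitor returns. Each visitor runs only up to its first `await` before `visit` moves on, so by the time `visit` returns and we call `resolve()`, the nodes have been turned into code blocks but none of the fetches has finished and no `value` has been set.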
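To see the problem in isolation, here is a minimal sketch with a hypothetical synchronous walker, `walk`, standing in for the real `visit`, and a hypothetical `fakeFetch`. Right after `walk` returns, every visitor has run up to its first `await` but none has finished.

```typescript
// Simplified, hypothetical node shape.
interface TreeNode {
  type: string;
  value?: string;
  children?: TreeNode[];
}

// Hypothetical synchronous walker standing in for `visit`: it calls `visitor`
// on every node and discards whatever the visitor returns.
const walk = (node: TreeNode, visitor: (node: TreeNode) => unknown): void => {
  visitor(node); // an async visitor's Promise is dropped here, never awaited
  for (const child of node.children ?? []) {
    walk(child, visitor);
  }
};

// Hypothetical stand-in for the GitHub fetch.
const fakeFetch = (): Promise<string> => Promise.resolve('file content');

const tree: TreeNode = {type: 'root', children: [{type: 'paragraph'}, {type: 'paragraph'}]};

walk(tree, async (node) => {
  if (node.type === 'paragraph') {
    node.type = 'code'; // runs synchronously, before the first `await`
    node.value = await fakeFetch(); // runs later, after `walk` has returned
  }
});

// `walk` has returned, but no visitor has finished yet.
const typesAfterWalk = tree.children!.map((child) => child.type);
const valuesAfterWalk = tree.children!.map((child) => child.value);
```

Here `typesAfterWalk` is already `['code', 'code']`, while every entry of `valuesAfterWalk` is still `undefined`.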

Our challenge now is to keep our visitor function synchronous but still allow our transformer to perform asynchronous fetches.

So far our AST transformation consists of a single pass: whenever we see a pair of valid embedding markers, we immediately process the GitHub link sandwiched between them into a code block. The solution to our challenge is to split the transformation into two passes:

- In the first pass, we use the `visitor` function to walk the AST while scanning the Markdown file for GitHub embedding markers. For each `paragraph` node that contains a valid pair of embedding markers, instead of processing the contained GitHub link right away, we record a reference to that paragraph node, its link, and the requested language and line range, and store this information in a list called `nodesToChange` outside the scope of `visitor`. This action by `visitor` is entirely synchronous.

- In the second pass, we iterate through the nodes in `nodesToChange` and, for each one, fetch and insert the GitHub content into that node. This fetching happens in the main body of the `Promise` executor, which we declare `async` so that it can `await` each fetch.

Here’s the first pass where we record all paragraph nodes that need to be transformed:

```typescript
// https://github.com/huy-nguyen/remark-github-plugin/blob/4705d924/src/transform.ts
export const transform = ({marker, token}: IOptions) => (tree: any) => new Promise(async (resolve) => {
  const nodesToChange: INodeToChange[] = [];
  const visitor = (node: any) => {
    const checkResult = checkNode(marker, node);
    if (checkResult.isCandidate === true) {
      const {language, link, range} = checkResult;
      nodesToChange.push({node, link, range, language});
    }
  };

  visit(tree, 'paragraph', visitor);
  // ...
});
```

and here’s the second pass where we actually transform those nodes:

```typescript
// https://github.com/huy-nguyen/remark-github-plugin/blob/4705d924/src/transform.ts
import {
  fetchGithubFile,
} from './fetchGithubContent';

export const transform = ({marker, token}: IOptions) => (tree: any) => new Promise(async (resolve) => {
  const nodesToChange: INodeToChange[] = [];
  // ...
  for (const {node, link, language} of nodesToChange) {
    node.type = 'code';
    node.children = undefined;
    node.lang = (language === undefined) ? null : language;
    const fileContent = await fetchGithubFile(link, token);
    node.value = fileContent;
  }
  resolve();
});
```
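A side note on the loop above: it awaits each fetch before starting the next, so a document with many embeds pays for every request one after another. One hedged alternative is to start all the fetches up front and wait for them together with `Promise.all`, at the cost of more simultaneous requests to GitHub (which may matter for rate limits). The sketch below compares the two; `fakeFetch`, `PendingNode`, `fillSequentially` and `fillConcurrently` are hypothetical, and the `inFlight` counters exist only to show how many requests overlap.

```typescript
interface PendingNode {
  link: string;
  value?: string;
}

// Hypothetical fetch stand-in that tracks how many requests overlap.
let inFlight = 0;
let maxInFlight = 0;
const fakeFetch = async (link: string): Promise<string> => {
  inFlight += 1;
  maxInFlight = Math.max(maxInFlight, inFlight);
  await Promise.resolve(); // simulate an asynchronous round trip
  inFlight -= 1;
  return `contents of ${link}`;
};

// Sequential: one request at a time, like the `for ... of` loop above.
const fillSequentially = async (nodes: PendingNode[]): Promise<void> => {
  for (const node of nodes) {
    node.value = await fakeFetch(node.link);
  }
};

// Concurrent: start every request, then wait for all of them.
// `Promise.all` keeps results in the same order as `nodes`.
const fillConcurrently = async (nodes: PendingNode[]): Promise<void> => {
  const contents = await Promise.all(nodes.map((node) => fakeFetch(node.link)));
  nodes.forEach((node, i) => {
    node.value = contents[i];
  });
};
```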

After this step, our repo looks like this.

The tests have been updated to assert that the generated code blocks do contain the corresponding GitHub file.

If you run `npm run test` at this point, all the tests should pass, indicating that we’re fetching GitHub content and manipulating the AST correctly.
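One design caveat with passing an `async` function as the `Promise` executor: if `fetchGithubFile` rejects, the error is not forwarded to the outer `Promise`, so `resolve` is never called and that `Promise` never settles. Because remark only needs the transformer to return a `Promise`, a possible alternative is to make the transformer itself `async`, so that a failed fetch surfaces as a rejected transformer. The self-contained sketch below uses simplified, hypothetical stand-ins: `walkParagraphs` for `visit`, `fakeFetchGithubFile` for `fetchGithubFile`, and a `CANDIDATE <link>` text check in place of `checkNode`.

```typescript
// Simplified, hypothetical node shape loosely modelled on mdast.
interface MdNode {
  type: string;
  value?: string;
  lang?: string | null;
  children?: MdNode[];
}

interface NodeToChange {
  node: MdNode;
  link: string;
  language?: string;
}

// Hypothetical stand-in for `visit(tree, 'paragraph', visitor)`.
const walkParagraphs = (node: MdNode, visitor: (node: MdNode) => void): void => {
  if (node.type === 'paragraph') {
    visitor(node);
  }
  for (const child of node.children ?? []) {
    walkParagraphs(child, visitor);
  }
};

// Hypothetical stand-in for `fetchGithubFile`: rejects for unknown links.
const files: Record<string, string> = {
  'https://github.com/owner/repo/blob/master/a.ts': 'const a = 1;',
};
const fakeFetchGithubFile = async (link: string): Promise<string> => {
  const content = files[link];
  if (content === undefined) {
    throw new Error(`Not found: ${link}`);
  }
  return content;
};

// The transformer itself is `async`: its Promise resolves once every node is
// updated and rejects if any fetch fails.
const transform = () => async (tree: MdNode): Promise<void> => {
  // First pass (synchronous): record candidate paragraphs.
  const nodesToChange: NodeToChange[] = [];
  walkParagraphs(tree, (node) => {
    const text = node.children?.[0]?.value ?? '';
    if (text.startsWith('CANDIDATE ')) { // hypothetical stand-in for checkNode
      nodesToChange.push({node, link: text.slice('CANDIDATE '.length), language: 'ts'});
    }
  });

  // Second pass (asynchronous): fetch first, then rewrite the node.
  for (const {node, link, language} of nodesToChange) {
    const fileContent = await fakeFetchGithubFile(link);
    node.type = 'code';
    node.children = undefined;
    node.lang = language === undefined ? null : language;
    node.value = fileContent;
  }
};
```

Fetching before mutating the node also means a failed fetch leaves that paragraph untouched rather than half-converted.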

Select a subset of a file to embed

The final requirement that our package has yet to satisfy is allowing the user to embed only a subset of a GitHub file.

For ease of testing, we’ll put the extraction in a dedicated function called `extractLines`.

In `extractLines`, after using parse-numeric-range to parse the number range notation (e.g. `1-3,5,8-10`) into an array of line numbers, we split the fetched file’s raw text into lines[2], pick out the lines that need to be embedded (ignoring line numbers that are not positive or that lie beyond the end of the file) and join them back into a single string:

```typescript
// https://github.com/huy-nguyen/remark-github-plugin/blob/57ef4dd4/src/extractLines.ts
import {parse} from 'parse-numeric-range';

export const extractLines = (rawFileContent: string, range: string): string => {
  const lineTerminator = '\n';
  const lines = rawFileContent.split(lineTerminator);
  const rawLineNumbers = parse(range);
  const lineNumbers = rawLineNumbers.filter(lineNumber => lineNumber > 0);

  const result = [];
  for (const lineNumber of lineNumbers) {
    const retrievedLine = lines[lineNumber - 1];
    if (retrievedLine !== undefined) {
      result.push(retrievedLine);
    }
  }

  return result.join(lineTerminator);
};
```
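`extractLines` always splits on `\n`, so for a file with DOS line endings (`\r\n`) each element of `lines` keeps a trailing `\r`. A minimal sketch of one way to support both endings, assuming it is enough to check whether the file contains `\r\n` anywhere: `detectLineTerminator` and `extractLinesAnyEol` are hypothetical names, and `parseRange` is a simplified stand-in for parse-numeric-range’s `parse` that only handles comma-separated numbers and `a-b` ranges.

```typescript
// Hypothetical, simplified stand-in for parse-numeric-range's `parse`:
// handles inputs like "1-3,5,8-10" only.
const parseRange = (range: string): number[] => {
  const result: number[] = [];
  for (const part of range.split(',')) {
    const [startText, endText] = part.trim().split('-');
    const start = Number(startText);
    const end = endText === undefined ? start : Number(endText);
    for (let n = start; n <= end; n++) {
      result.push(n);
    }
  }
  return result;
};

// Use DOS line endings only if the file actually contains them.
const detectLineTerminator = (text: string): string =>
  text.includes('\r\n') ? '\r\n' : '\n';

// Same logic as `extractLines`, but splits and joins on the detected terminator.
const extractLinesAnyEol = (rawFileContent: string, range: string): string => {
  const lineTerminator = detectLineTerminator(rawFileContent);
  const lines = rawFileContent.split(lineTerminator);
  const result: string[] = [];
  for (const lineNumber of parseRange(range)) {
    const retrievedLine = lineNumber > 0 ? lines[lineNumber - 1] : undefined;
    if (retrievedLine !== undefined) {
      result.push(retrievedLine);
    }
  }
  return result.join(lineTerminator);
};
```

For example, `extractLinesAnyEol('a\r\nb\r\nc', '2-3')` returns `'b\r\nc'`, keeping the file’s own line endings.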

Then our transformer can use this new `extractLines` function if line ranges are specified:

```typescript
// https://github.com/huy-nguyen/remark-github-plugin/blob/57ef4dd4/src/transform.ts
import {
  extractLines,
} from './extractLines';

export const transform = ({marker, token}: IOptions) => (tree: any) => new Promise(async (resolve) => {
  // ... first pass that populates `nodesToChange`, as before
  for (const {node, link, language, range} of nodesToChange) {
    node.type = 'code';
    node.children = undefined;
    node.lang = (language === undefined) ? null : language;
    const rawFileContent = await fetchGithubFile(link, token);

    let fileContent: string;
    if (range === undefined) {
      fileContent = rawFileContent;
    } else {
      fileContent = extractLines(rawFileContent, range);
    }

    node.value = fileContent;
  }
  resolve();
});
```

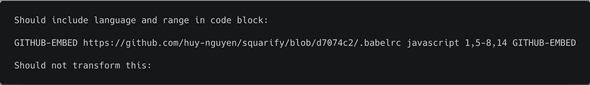

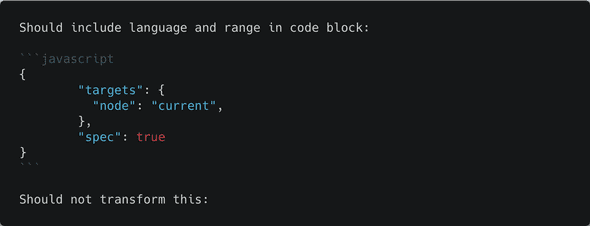

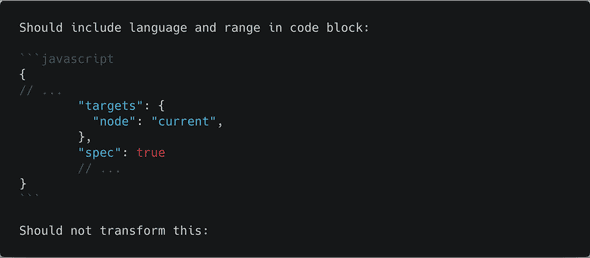

After this step, the repo looks like this. As usual, all the tests should pass if you run them. At this point, the following input with line ranges:

should be transformed into this output:

However, we can go a step further and insert “ellipsis comments” (a term I coined for this tutorial) to indicate which portions of the GitHub file are not shown:

Because implementing this “nice-to-have” functionality is a bit tedious and not at all essential to this library, I’ll skip showing it, but you can see the full implementation in `extractLines.ts`, `wrapInComment.ts` and `transform.ts`.
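For a rough idea of what that involves, here is a simplified sketch; it is not the author’s implementation. Given the line numbers to keep, it inserts a comment line wherever lines are skipped, including before the first and after the last kept line. It assumes the embedded language uses `//` line comments; the `// ...` text and the function name `extractWithEllipses` are illustrative choices.

```typescript
// Assumed comment syntax for the embedded language (`//`-style here).
const ellipsisComment = '// ...';

// Keep the 1-based `lineNumbers` from `lines`, inserting `ellipsisComment`
// wherever one or more lines are skipped.
const extractWithEllipses = (lines: string[], lineNumbers: number[]): string[] => {
  const sorted = Array.from(new Set(lineNumbers))
    .filter((n) => n >= 1 && n <= lines.length)
    .sort((a, b) => a - b);
  const result: string[] = [];
  let previous = 0; // last line number emitted; 0 means none yet
  for (const n of sorted) {
    if (n > previous + 1) {
      result.push(ellipsisComment); // lines previous+1 .. n-1 were skipped
    }
    result.push(lines[n - 1]);
    previous = n;
  }
  if (previous < lines.length) {
    result.push(ellipsisComment); // lines after the last kept one were skipped
  }
  return result;
};
```

With lines `l1` to `l6` and line numbers `[2, 3, 5]`, the result is `// ...`, `l2`, `l3`, `// ...`, `l5`, `// ...`.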

At this point (published as version 1.0.0), our remark plugin has all the features that we laid out in part one of this tutorial[3].

Build, test and publish to npm

Developing fully functional code isn’t the end of our story. We need to publish the plugin to npm for distribution to users and follow some best practices to make sure our package is a good citizen of the JavaScript ecosystem. Based on my experience developing and publishing a few packages on npm, here are my guidelines:

- Development should follow the git flow model. Most work should be performed on feature branches and then incorporated into the code base through pull requests (PRs) to a “main” branch. By convention, `master` is the main branch used for production and `develop` is for staging (if a library is complex enough). No one should directly `git push` to either `master` or `develop`.

- The build and bundling process must be done by a Continuous Integration (CI) service, such as CircleCI (which we use) or Travis. The consistent environment provided by CI services ensures that our builds are reproducible and helps us avoid the “works on my machine” problem when collaborating with other developers. The CI service should be configured to make a new build on every `git push` or at least on every new PR. Whether that build is published to npm depends on the branch; because our package is fairly simple, we only publish from `master`.

- There must be automated unit tests and integration tests. In “library” packages such as ours (as opposed to “application” packages), test coverage should be as close to 100% of the production code as possible. Code coverage should be tracked over time to ensure new features are adequately tested. This package uses codecov.

- We must be able to build a package in one step with a single shell command, e.g. `npm run build`. Build artifacts should not be checked into version control.

- Write source code using the latest language features to promote readability and simplicity, but publish code that has been transpiled to the “lowest common denominator” of all supported consumers. In this project, we write production code in TypeScript, whose static type annotations serve as a built-in, machine-checked form of documentation. In addition to type checking, the TypeScript compiler also transpiles TypeScript to modern JavaScript. We then use Babel to “transpile away” modern JavaScript language features that our lowest supported NodeJS version cannot understand.

- Because JavaScript can be run in many different environments (server-side, client-side, with or without a build tool or transpiler), each version of a package should come in multiple builds for the convenience of that package’s consumers[4]. Consumers of a package should not have to transpile its code because transpilation should be done by the package’s author.

  - An ES module build, mostly intended for build tools that can perform tree-shaking, such as Rollup or Webpack. However, with the coming advent of native support for ES modules in NodeJS, this build may also be consumed by NodeJS in the future. It’s OK to have loose files in this build. The choice of directory name is up to you but `es` is a good one. It should be referenced under the `module` field in `package.json` (aka `pkg.module` in current parlance).

  - A CommonJS build, mostly intended for NodeJS users but also usable by Webpack or Rollup. A consumer of this version should be able to `require()` and use it right away. The convention is to put it in the `lib` directory and reference it in the `pkg.main` field. It’s OK to have loose files in this build.

  - A single-file, minified build to be consumed by the browser, if applicable. This build should be runnable if loaded directly into the browser via a `script` tag from a URL. This file should follow the Universal Module Definition (UMD) format, meaning it can be consumed by either a CommonJS module loader (such as NodeJS) or an Asynchronous Module Definition (AMD) loader (such as RequireJS). By convention, we should put it in the `dist` directory and reference it in the `pkg.browser` field. This build should also expose the package’s top-level export through a global variable. For example, you can access jQuery’s top-level export through `window.$`. Even though this remark plugin cannot be used in a browser, we still make a `dist` build for demonstration purposes but don’t set the `pkg.browser` field.

- The single-source multiple-builds rule usually implies that we need to use a build tool like Webpack or Rollup. Conventional industry wisdom says that Rollup is better suited for “library” packages (such as this one) whereas Webpack is better for “application” packages.

- Packages should obey semantic versioning (semver). Because our commit messages adhere to the conventional changelog standard, we’re able to use `semantic-release` to enforce semver. After every pull request to `master`, `semantic-release` scans commit messages to decide if there have been new features, bug fixes or breaking changes since the last publication to npm. If so, a new version is published.

- As many dependencies as possible should be locally scoped to the package’s directory and declared in `package.json`. This is easy for functional packages, i.e. dependencies that you `require` or `import` in your code, but can get a bit tricky for build tools, which you call on the command line, such as Webpack, Rollup or TypeScript. However, even the latter group can be locally scoped to the package’s directory by invoking them through npm scripts.

- Use dependency lock files (`package-lock.json` or `yarn.lock`) to ensure builds in all build environments use the exact same dependency tree. Also ensure that each dependency is declared under the correct type (e.g. `dependencies` versus `devDependencies`).

- Because we cannot count on all npm packages to obey semver, try to pin the exact versions of as many dependencies as possible. Avoid `^` or `~` in version numbers if possible. However, if you follow this rule, do use an automated service (e.g. greenkeeper) to get PRs when new versions of our dependencies are released. If our unit and integration tests are good, they should warn us if a new version of a dependency breaks our package.

- All API keys, access tokens and other types of credentials should be kept in environment variables and never checked into version control.
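The idea behind letting commit messages drive version numbers can be illustrated with a much-simplified sketch. It is not how `semantic-release` is implemented or configured; it only applies the Conventional Commits rules of thumb: a `!` after the type or a `BREAKING CHANGE:` footer means a major release, `feat` a minor one, `fix` a patch, and anything else no release. The names `bumpForCommit`, `releaseType` and `nextVersion` are hypothetical.

```typescript
type Bump = 'major' | 'minor' | 'patch' | null;

const rank: Record<Exclude<Bump, null>, number> = {patch: 1, minor: 2, major: 3};

// Classify one commit message using Conventional Commits conventions.
const bumpForCommit = (message: string): Bump => {
  const [header] = message.split('\n');
  const match = /^(\w+)(\([^)]*\))?(!)?:/.exec(header);
  if (match === null) return null; // not a conventional commit
  const [, type, , bang] = match;
  if (bang === '!' || /^BREAKING[ -]CHANGE:/m.test(message)) return 'major';
  if (type === 'feat') return 'minor';
  if (type === 'fix') return 'patch';
  return null;
};

// The release type is the largest bump among all commits since the last release.
const releaseType = (messages: string[]): Bump =>
  messages.map(bumpForCommit).reduce<Bump>((best, bump) => {
    if (bump === null) return best;
    if (best === null || rank[bump] > rank[best]) return bump;
    return best;
  }, null);

// Apply a bump to a plain "MAJOR.MINOR.PATCH" version string.
const nextVersion = (version: string, bump: Bump): string => {
  const [major, minor, patch] = version.split('.').map(Number);
  switch (bump) {
    case 'major': return `${major + 1}.0.0`;
    case 'minor': return `${major}.${minor + 1}.0`;
    case 'patch': return `${major}.${minor}.${patch + 1}`;
    default: return version;
  }
};
```

For instance, `releaseType(['docs: typo', 'fix: a', 'feat: b'])` is `'minor'`, so `nextVersion('1.0.0', 'minor')` gives `'1.1.0'`.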

Create plugin for gatsby-transformer-remark

At this point, we’ve done the hard part, which is writing the logic for all the AST manipulations.

Creating a plugin for gatsby-transformer-remark is actually quite easy.

All we have to do is create a regular npm package whose main file (`/index.js` by convention) invokes the `transform` function exported from `remark-github-plugin`.

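Per gatsby-transformer-remark’s documentation, this main file should export a function that takes in a Markdown AST together with relevant options and returns the modified AST. Because our `transform` modifies the AST in place, the plugin below returns the `Promise` produced by `transform` rather than the AST itself: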

```javascript
// https://github.com/huy-nguyen/gatsby-remark-github/blob/master/index.js
const {transform} = require('remark-github-plugin');
const plugin = ({markdownAST}, options) => transform(options)(markdownAST);

module.exports = plugin;
```

The result is contained in this repo.
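To use the plugin, a site registers it as a sub-plugin of `gatsby-transformer-remark`. A minimal sketch of that registration, assuming the package is published under the repo’s name, `gatsby-remark-github`, and accepts the same `marker` and `token` options as `remark-github-plugin`; the marker value and the `GITHUB_TOKEN` variable name are hypothetical. Reading the token from an environment variable follows the guideline above about keeping credentials out of version control.

```typescript
// gatsby-config.ts (newer Gatsby versions accept a TypeScript config; older
// sites export the same object from gatsby-config.js via `module.exports`).
export default {
  plugins: [
    {
      resolve: 'gatsby-transformer-remark',
      options: {
        // Sub-plugins receive the Markdown AST parsed by gatsby-transformer-remark.
        plugins: [
          {
            resolve: 'gatsby-remark-github', // assumed npm name, taken from the repo name
            options: {
              marker: 'GITHUB-EMBED', // hypothetical; use the marker your Markdown actually uses
              token: process.env.GITHUB_TOKEN, // hypothetical variable name; keeps the token out of git
            },
          },
        ],
      },
    },
  ],
};
```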

[object Promise]is the result of calling.toStringon aPromise.↩- An obvious enhancement we can do here is to detect whether the line endings is Unix (

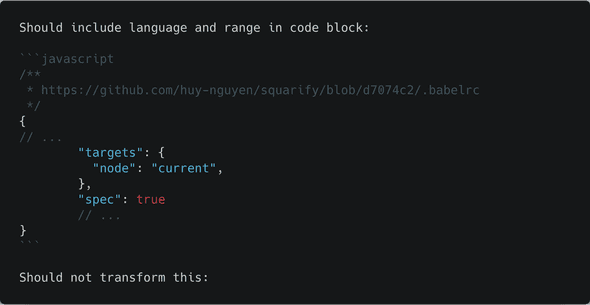

\n) or DOS (\r\n).↩ - After this commit, I didn’t make many minor changes except for a small new feature that adds the URL of the embedded file into a comment at the start of the code blocks, like this:

↩

↩ - You can read more about it here.↩